Imagimob's System Application Engineer Lukas Wirkestrand shares how the latest release of DEEPCRAFT™ Studio enables companies to develop Edge AI solutions for their products.


The last couple of years have produced powerful AI tools that are no longer limited to governments and large companies but are available even on the handheld devices of everyday people. Impressive generative agents like ChatGPT and adaptable object detectors like YOLO provide a route for small companies and entrepreneurs with dreams of start-up stardom. My name is Lukas Wirkestrand, and I am an engineer at Imagimob with a background in Machine Learning. In this technical blog I will cover how the latest release of DEEPCRAFT™ Studio provides that same opportunity, for companies big and small, to bring their Edge AI products into development.

The February release of DEEPCRAFT™ Studio introduces support for the AI Evaluation Kit: a super lightweight PSOC™ 6 board that allows for direct streaming of data into DEEPCRAFT™ Studio from a myriad of sensors.

The board contains a microphone, accelerometer, magnetometer, gyroscope, pressure sensor, thermometer, and radar, allowing you to easily collect whatever data your use case requires. As a Machine Learning (ML) engineer, I know the challenge of finding solid data and the importance of becoming intimately familiar with your dataset, a process that often takes weeks. Collecting your own data gives you thorough knowledge of what you're training your model on, but it often limits how much data you can gather. However, with the streamlined process provided by DEEPCRAFT™ Studio and the AI Evaluation Kit, data collection can be done even by people without ML experience, and much more quickly! Below is a short video of me recording data and applying the labels using DEEPCRAFT™ Studio and the AI Evaluation Kit.

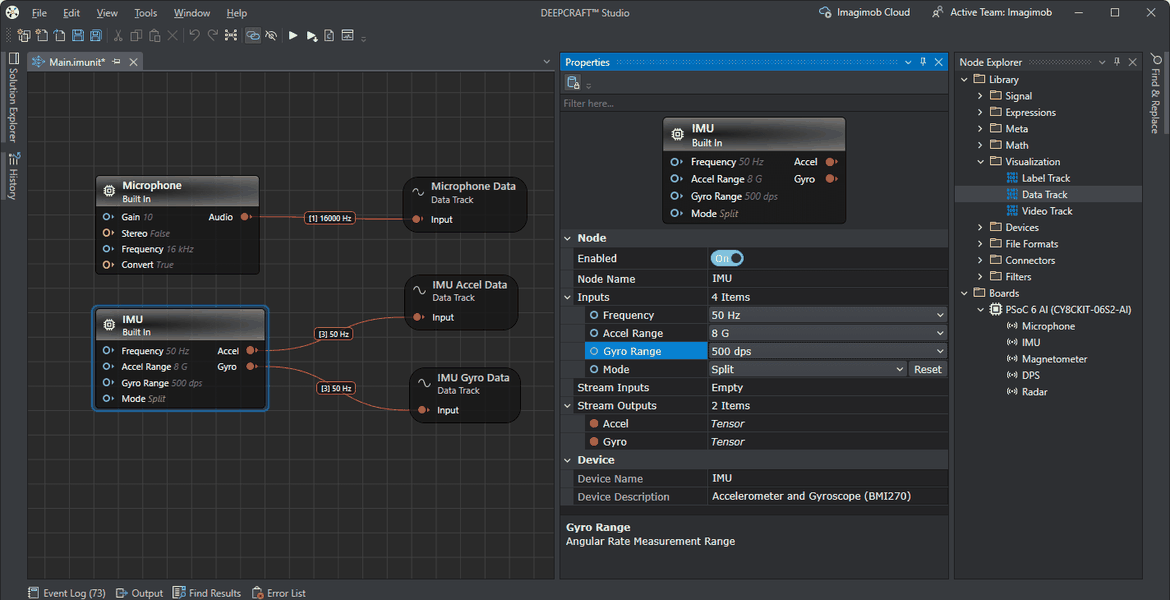

Having one MCU collect data from multiple sensors simultaneously opens the door to many projects and lowers the barrier to creating sensor fusion models. Different modalities capture different details and trends, and by combining them we can create models that harness the benefits of each. Below you can see a Graph UX visualization of the data flow I used to collect data for a dual-sensor model using both audio (16,000 Hz) and IMU (50 Hz) data. This required preprocessing the data so that the sampling frequencies matched. Because DEEPCRAFT™ Studio already has a built-in audio preprocessing feature, the 'Mel Spectrogram', I used it to convert the audio into spectrogram frames at 50 Hz. After scaling and concatenation, the multimodal data can be saved, ready for training a sensor fusion model. This example is just one of many possibilities; in theory, any combination of sensors could be supported, although some combinations are more useful than others.
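As a rough illustration of the frame-rate matching described above: at 16,000 Hz, advancing the analysis window by 16,000 / 50 = 320 samples produces exactly 50 spectrogram frames per second, one per IMU sample. The following is a minimal pure-Python sketch of that idea, not DEEPCRAFT™ Studio's built-in Mel Spectrogram; the non-overlapping 320-sample frames, Hann window, 8 mel bands, HTK-style mel formula, z-score scaling, and function names such as `fuse` are all assumptions made for illustration.

```python
import cmath
import math

SR_AUDIO = 16_000            # audio sample rate from the post (Hz)
RATE_IMU = 50                # IMU sample rate from the post (Hz)
HOP = SR_AUDIO // RATE_IMU   # 320 audio samples per frame -> 50 frames per second
N_FFT = HOP                  # assumption: non-overlapping 320-sample frames
N_MELS = 8                   # assumption: a handful of mel bands, for illustration


def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels=N_MELS, n_fft=N_FFT, sr=SR_AUDIO):
    """Triangular filters mapping the n_fft // 2 + 1 power bins onto n_mels bands."""
    top = hz_to_mel(sr / 2)
    # Filter edges, evenly spaced in mel, converted to fractional FFT bin positions.
    edges = [mel_to_hz(top * i / (n_mels + 1)) * n_fft / sr for i in range(n_mels + 2)]
    bank = []
    for lo, mid, hi in zip(edges, edges[1:], edges[2:]):
        bank.append([
            (k - lo) / (mid - lo) if lo < k <= mid
            else (hi - k) / (hi - mid) if mid < k < hi
            else 0.0
            for k in range(n_fft // 2 + 1)
        ])
    return bank


def power_spectrum(frame):
    """Hann-windowed power spectrum via a naive DFT (clear, not fast)."""
    n = len(frame)
    x = [s * (0.5 - 0.5 * math.cos(2 * math.pi * i / n)) for i, s in enumerate(frame)]
    twiddle = [cmath.exp(-2j * math.pi * m / n) for m in range(n)]
    return [abs(sum(x[t] * twiddle[(k * t) % n] for t in range(n))) ** 2
            for k in range(n // 2 + 1)]


def log_mel_frames(audio, bank):
    """One log-mel vector per HOP samples, i.e. 50 vectors per second of audio."""
    frames = []
    for start in range(0, len(audio) - N_FFT + 1, HOP):
        power = power_spectrum(audio[start:start + N_FFT])
        frames.append([math.log(sum(w * p for w, p in zip(row, power)) + 1e-10)
                       for row in bank])
    return frames


def standardize(rows):
    """Z-score each column so audio and IMU features end up on a comparable scale."""
    n = len(rows)
    cols = list(zip(*rows))
    means = [sum(c) / n for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / n) or 1.0
            for c, m in zip(cols, means)]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def fuse(audio, imu):
    """Concatenate 50 Hz log-mel frames with 50 Hz IMU samples, frame by frame."""
    mel = log_mel_frames(audio, mel_filterbank())
    n = min(len(mel), len(imu))  # trim both streams to the shorter one
    return [a + b for a, b in zip(standardize(mel[:n]), standardize(imu[:n]))]
```

For example, 0.2 s of audio (3,200 samples) plus 10 IMU rows of 6 values each yields 10 fused rows of 8 + 6 = 14 features. The naive DFT keeps the sketch dependency-free; a real pipeline would use an FFT library.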

Another benefit of using the AI Evaluation Kit for data acquisition is easy on-device model evaluation, since the kit is built on PSOC™ 6. Once you're satisfied with your model in DEEPCRAFT™ Studio and have generated the hardware-optimized C code, you can flash and test the model directly on the board. You never have to worry about sensor mismatch, where data is collected with one microphone and the model is then deployed on a board with a different one. In fact, you don't even need to plug in any additional device: you can collect data, train a model, and deploy it onto a PSOC™ 6 over a single USB-C connection.

The new streaming protocol isn't just a boon for PSOC™ 6; it was designed to be hardware-agnostic and can just as easily stream data from any sensor that provides time-series data! If you're already committed to a particular sensor or board, this means you only need to develop firmware to stream data from it, which you can do by following this guide.

I was able to go through every step of creating a brand-new model, from data collection to model building and hyperparameter optimization, in just 8 hours. Using only DEEPCRAFT™ Studio and an AI Evaluation Kit, I could build a proof of concept. The new streaming support for the AI Evaluation Kit is a game changer for efficiently creating proofs of concept for Edge AI models. More guides to get you started can be found on our YouTube channel. If you're interested in scheduling a technical training covering data collection, model creation, and evaluation with DEEPCRAFT™ Studio, contact us here, and we'll get you rolling!

Here are the full release notes for the February release of DEEPCRAFT™ Studio:

Improved Tensor Streaming Protocol for Real-Time Data Collection

The Streaming Protocol is a comprehensive set of guidelines designed to facilitate the streaming of data from any sensor or development kit into DEEPCRAFT™ Studio. We have introduced an enhanced version of this protocol, known as Tensor Streaming Protocol version 2, to simplify the implementation of custom firmware for data collection and model evaluation in real-time.

Tensor Streaming Protocol version 2 defines a streaming mechanism for communication between a client and a board. The protocol is intended to work over TCP, UDP, serial port, and Bluetooth. It is designed to handle multiple data streams from sensors, models, and playback devices, enabling efficient data transfer and processing in embedded systems. The protocol is based on protobuf3, and a DotNetCli test client is provided to evaluate your implementation. Refer to Tensor Streaming Protocol for Real-Time Data Collection to learn more.
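The message schema and framing rules of Tensor Streaming Protocol version 2 are defined in its documentation and are not reproduced here. One problem any firmware author meets on a byte-stream transport such as a serial port or TCP is that message boundaries are not preserved. A common protobuf convention, assumed here purely for illustration, is to prefix each serialized message with its length encoded as a base-128 varint. In this sketch `frame` and `Deframer` are hypothetical names, and each payload is treated as opaque bytes produced by any protobuf3 serializer.

```python
def encode_varint(value: int) -> bytes:
    """Protobuf base-128 varint: 7 bits per byte, high bit set on all but the last byte."""
    if value < 0:
        raise ValueError("length must be non-negative")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def frame(payload: bytes) -> bytes:
    """Prefix one serialized message with its length (hypothetical framing)."""
    return encode_varint(len(payload)) + payload


class Deframer:
    """Rebuilds whole messages from byte chunks split arbitrarily by the transport."""

    def __init__(self) -> None:
        self._buf = bytearray()

    def feed(self, chunk: bytes) -> list:
        self._buf.extend(chunk)
        messages = []
        while True:
            # Try to decode the varint length prefix at the front of the buffer.
            length, shift, pos = 0, 0, 0
            while pos < len(self._buf):
                b = self._buf[pos]
                length |= (b & 0x7F) << shift
                shift += 7
                pos += 1
                if not b & 0x80:
                    break
            else:
                return messages  # length prefix not complete yet
            if len(self._buf) - pos < length:
                return messages  # payload not complete yet
            messages.append(bytes(self._buf[pos:pos + length]))
            del self._buf[:pos + length]
```

Feeding the receiver three bytes at a time, as a transport might deliver them, still yields each original message exactly once. Datagram transports such as UDP already preserve message boundaries, so whether a prefix is needed there depends on the protocol's own rules.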

Tensor Streaming Protocol version 1 is deprecated, and we recommend using Tensor Streaming Protocol version 2 for improved performance and easier implementation. However, backward compatibility is maintained: if your firmware currently implements Tensor Streaming Protocol version 1, you can still stream data into Studio without any issues.

The PSOC™ 6 AI Evaluation Kit comes pre-programmed with streaming firmware. Using Tensor Streaming Protocol version 2, we have developed new streaming firmware for the AI Kit that simplifies data collection and addresses known issues. The new firmware offers more flexibility in collecting data from the kit's various sensors at different rates and allows simultaneous data collection from multiple sensors. To learn how to collect data using the new streaming firmware, refer to Real-Time Data Streaming with PSOC™ 6 AI Evaluation Kit using the new streaming firmware.
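Collecting from several sensors at different rates means their samples must be placed on a shared timeline before they can be combined. How the new streaming firmware and Studio handle this is not described here; the following is a minimal sketch, assuming each stream arrives as sorted timestamps in seconds with matching values, that resamples every stream onto one uniform grid using zero-order hold (repeating the most recent sample). The names `sample_and_hold` and `align` are hypothetical.

```python
from bisect import bisect_right


def sample_and_hold(timestamps, values, grid):
    """For each grid time, take the latest sample at or before it (None if none yet)."""
    out = []
    for t in grid:
        i = bisect_right(timestamps, t) - 1
        out.append(values[i] if i >= 0 else None)
    return out


def align(streams, rate_hz, duration_s):
    """Resample {name: (timestamps, values)} streams onto one uniform time grid."""
    n = int(round(duration_s * rate_hz))
    grid = [k / rate_hz for k in range(n)]
    return grid, {name: sample_and_hold(ts, vs, grid)
                  for name, (ts, vs) in streams.items()}
```

For example, aligning a 10 Hz pressure stream with 50 Hz IMU data on a 50 Hz grid repeats each pressure reading five times. Zero-order hold is only one option; linear interpolation, or averaging the faster stream down to the slower rate, may suit some sensors better.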

We recommend flashing the kits with the new streaming firmware to take advantage of these improvements. Note that kits manufactured after February 2025 will come pre-programmed with the new streaming firmware. For instructions on how to flash the new streaming firmware onto the kit, refer to the Infineon PSOC™ 6 Artificial Intelligence Evaluation Kit.

DEEPCRAFT™ Studio Accelerators (formerly Starter Models) are designed to kickstart your Edge AI journey. They are deep learning-based projects that cover various use cases and serve as starting points for building custom applications. The DEEPCRAFT™ Studio Accelerators are open-source and include all the necessary datasets, preprocessing steps, model architectures, and instructions to help you develop production-ready Edge AI models.

You can download DEEPCRAFT™ Studio Accelerators from DEEPCRAFT™ Studio and fine-tune them to suit your specific needs. DEEPCRAFT™ Studio offers 3000 minutes of compute time per month, free for development, evaluation, and testing purposes. This provides a valuable opportunity to gain hands-on experience in creating and deploying machine learning models from start to finish. To learn how to get started, refer to the DEEPCRAFT™ Studio Accelerators section.

Overall bug fixes and increased stability