By Sam Al-Attiyah and Songyi Ma

Imagimob AI can give you a head start on your project by helping you identify which classes to focus on when building your model. In this article, we are going to make gesture control come true using the Acconeer radar and Imagimob AI. Specifically, we explore gesture data visualization, visualising pre-processing, and gesture selection. We also show how to do all of this in Imagimob Studio, which is part of the Imagimob AI package. By starting you on the right path, Imagimob Studio helps you build and deploy great models.

Gesture Data Visualization

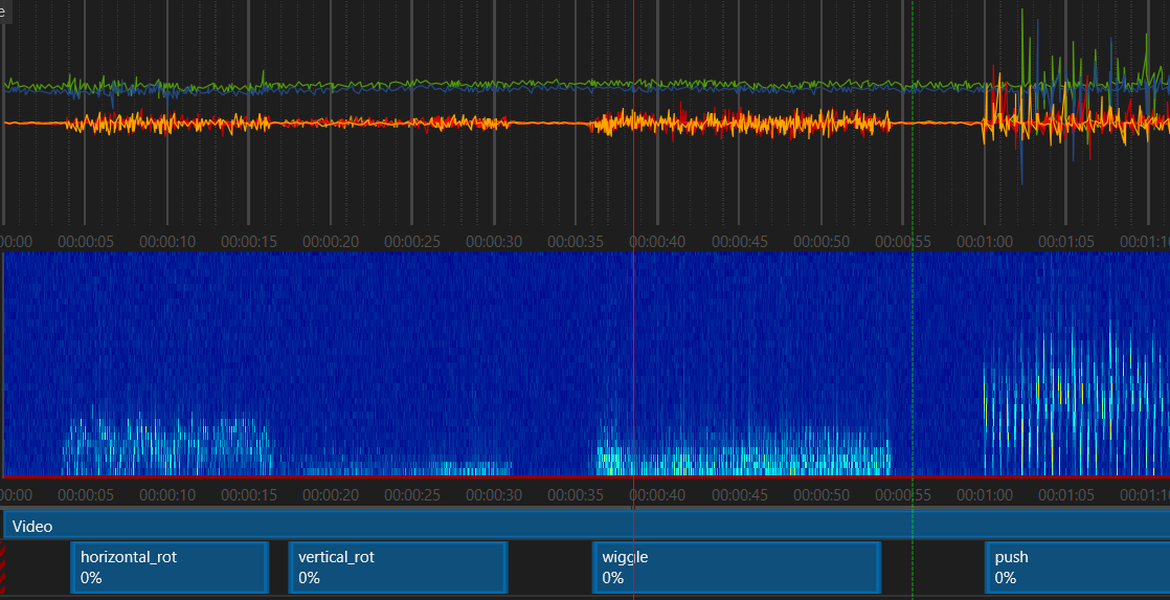

Of course, every machine learning engineer should understand the data before any training. In Imagimob Studio, data visualization is a built-in feature that works smoothly: it happens as soon as you import the data. This gives you a clear picture of the time-series data that is fed into the model for training.

Fig 1. Gestures collected by Acconeer sensors, viewed in Imagimob Studio.

Visualising Pre-processing & Selecting Gestures

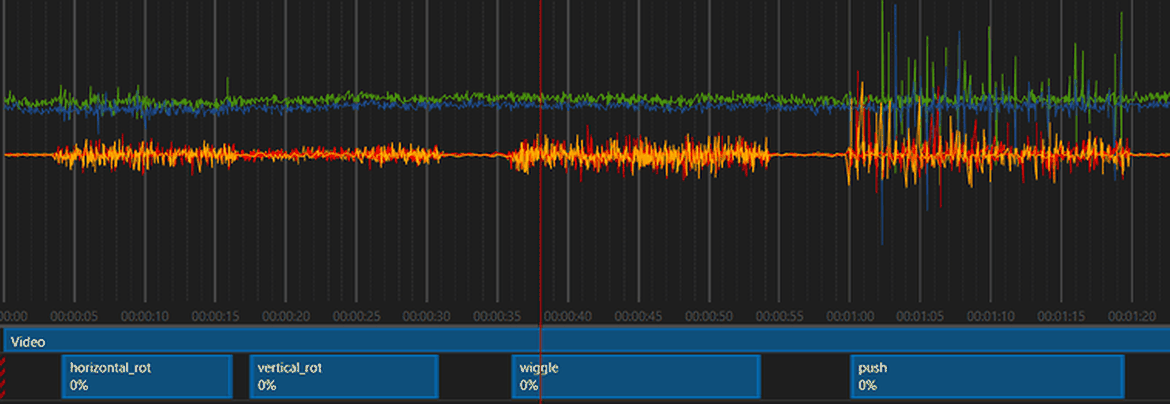

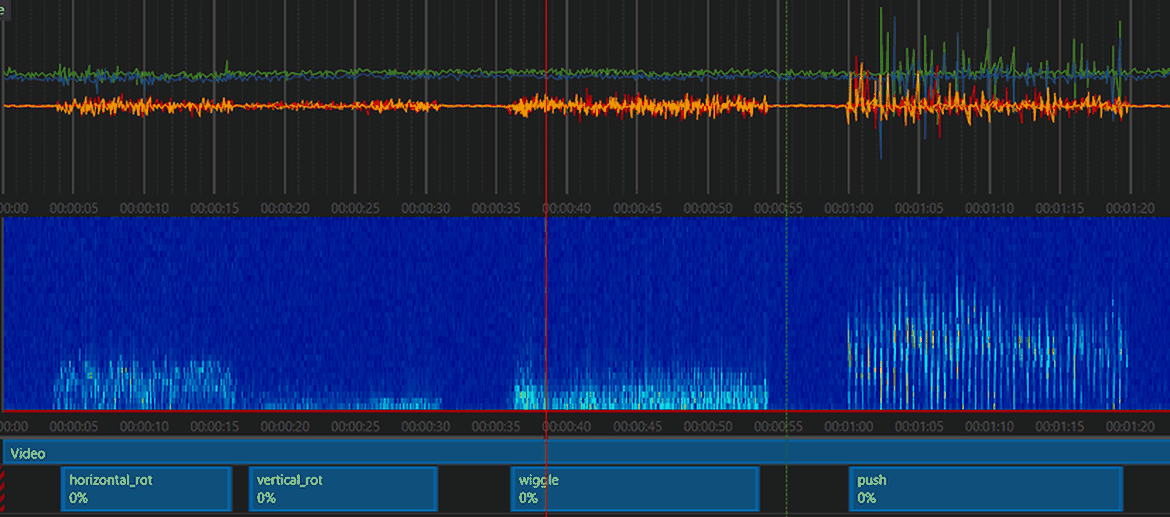

You can use Imagimob AI to visualise your data, but even more powerfully, you can use it to visualise your pre-processing with very little effort. By visualising your pre-processing, you know exactly what goes into your model. This helps you not only improve model performance but also identify which events, gestures or classes are easy to distinguish.

This is what Imagimob AI's Create Track from Preprocessor feature is for, and it helps you overcome the challenge of gesture selection. It lets you visualise the output of your pre-processing so you can check that what you pass to the model is distinct for each gesture.

As Fig 2 shows, given a list of four gestures, we can easily choose the three we want to proceed with. The degree of class differentiation also gives an early indication of the model's potential.

Fig 2. Selecting gestures from a set of candidate gestures.
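In Imagimob Studio this choice is made by eye from the plotted tracks. If you also want a numeric cross-check, here is a minimal Python sketch of one possible heuristic; it is our own illustration, not an Imagimob AI feature. It assumes each recording has already been pre-processed into a fixed-length feature vector, scores every pair of gestures by the distance between their class centroids divided by their average within-class spread, and keeps the k gestures whose least-separated pair scores highest. The gesture names, the feature values and the scoring formula are all hypothetical.

```python
from itertools import combinations
from math import dist, sqrt
from statistics import mean


def centroid(vectors):
    """Element-wise mean of a list of equal-length feature vectors."""
    return [mean(column) for column in zip(*vectors)]


def spread(vectors):
    """Root-mean-square distance of the vectors from their centroid."""
    c = centroid(vectors)
    return sqrt(mean(dist(v, c) ** 2 for v in vectors))


def separability(a, b):
    """Hypothetical score: centroid distance over mean within-class spread."""
    within = (spread(a) + spread(b)) / 2
    return dist(centroid(a), centroid(b)) / (within + 1e-9)


def select_gestures(features, k):
    """Return the k gestures whose least-separable pair scores highest."""
    best_subset, best_score = None, float("-inf")
    for subset in combinations(sorted(features), k):
        worst = min(separability(features[x], features[y])
                    for x, y in combinations(subset, 2))
        if worst > best_score:
            best_subset, best_score = subset, worst
    return best_subset, best_score


# Toy pre-processed features (hypothetical): three repetitions per gesture.
features = {
    "swipe_left": [[1.0, 0.1], [1.1, 0.0], [0.9, 0.2]],
    "swipe_right": [[-1.0, 0.1], [-1.1, 0.0], [-0.9, 0.2]],
    "push": [[0.0, 1.0], [0.1, 1.1], [-0.1, 0.9]],
    "wiggle": [[0.9, 0.3], [1.0, 0.1], [1.2, 0.2]],  # overlaps swipe_left
}
subset, score = select_gestures(features, k=3)
print(subset)  # ('push', 'swipe_left', 'swipe_right')
```

Scoring a subset by its worst pair, rather than its average, reflects the fact that a single pair of look-alike gestures is enough to confuse a classifier; in this toy data, "wiggle" overlaps "swipe_left", so no subset containing both is chosen.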

Visualising Pre-processing in Imagimob Studio

We've covered the benefits of visualising pre-processing; in this section we show you how to do it. In Imagimob Studio, simply collect all the gestures you wish to evaluate into one file. It's good practice to record multiple repetitions of each gesture so you get a good overview. Then do the following:

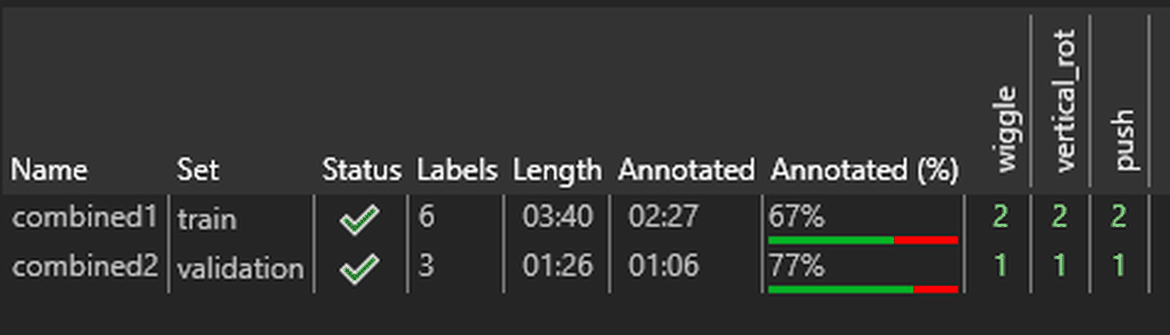

1. Add the data to your project file.

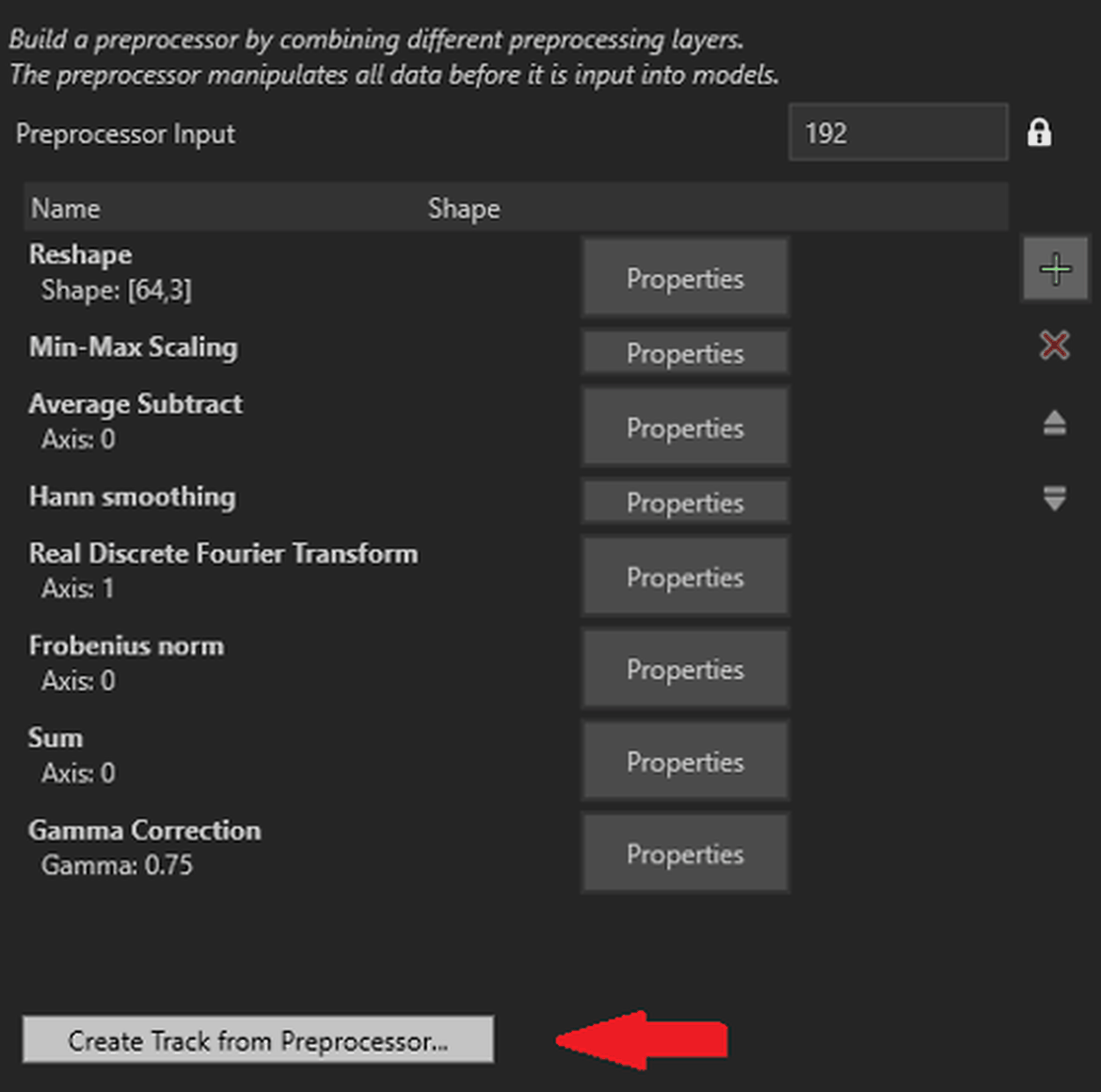

2. Add your desired pre-processing functions and click “Create Track from Preprocessor”.
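Conceptually, this step runs the raw track through your pre-processing and keeps the output as a new track that you can plot next to the original. The minimal Python sketch below illustrates that idea outside the Studio. It assumes a single-channel signal and uses a sliding-window mean and standard deviation as stand-in pre-processing functions; `window_features`, the window length, the stride and the toy signal are hypothetical choices, not Imagimob Studio's actual pre-processing layers.

```python
from statistics import fmean, pstdev


def window_features(signal, window, stride):
    """Turn a raw 1-D track into a track of (mean, std) per sliding window.

    Hypothetical stand-in pre-processing. Windows that would run past the
    end of the signal are dropped, so the output has
    (len(signal) - window) // stride + 1 rows when len(signal) >= window.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    track = []
    for start in range(0, len(signal) - window + 1, stride):
        chunk = signal[start:start + window]
        track.append((fmean(chunk), pstdev(chunk)))
    return track


# Toy single-channel track (hypothetical): a burst of motion in the middle.
raw = [0.0, 0.0, 0.0, 1.0, -1.0, 1.0, -1.0, 0.0, 0.0, 0.0]
for row in window_features(raw, window=4, stride=2):
    print(row)
```

In the resulting track, the standard deviation rises in the windows that cover the burst, which is the kind of contrast you would look for when checking whether a pre-processing step makes gestures distinguishable.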