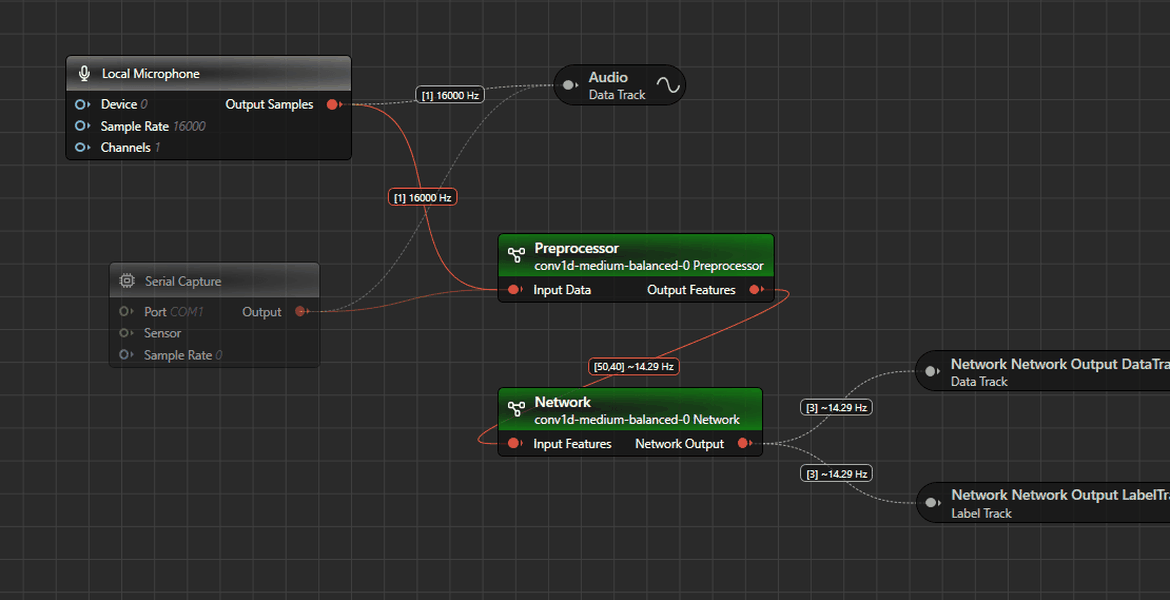

Gone are the days of top-to-bottom, fixed-pipeline workflows. Our latest Imagimob Studio release contains a major user experience upgrade we call Graph UX.

Graph UX is a visual interface designed to give you a better overview of your machine learning (ML) modeling process while offering exciting new capabilities such as built-in data collection and real-time model evaluation for edge devices.

The new Graph UX allows you and your ML engineering team to gain a complete visual overview of your modeling canvas, with the ability to zoom in and out and work at different levels of complexity.

In addition to making it easier to work more efficiently as a team, Graph UX makes ML modeling projects more accessible to team members with varying skill sets and experience levels. This is thanks to the ability to work at different levels of complexity and abstraction all in the same workspace.

Imagimob Studio’s Graph UX update not only enhances user-friendliness but introduces a collection of new capabilities to the ML design process. Let’s take a closer look.

Skip the tedious work of collecting data from your device. Now you can just connect it to your PC with a USB cable, record data, and see and annotate it live.

No more “black box” dilemmas that made it difficult to evaluate and debug models already deployed on your device. Now you can connect your device to your PC and run the ML models to understand what is happening live, anywhere in the model.

We’re leaving rigid modeling procedures in the past. Now you are free to explore deeper levels of complexity and experiment with new possibilities in a fraction of the time. Run multiple models in parallel, process data in different ways, combine them however you want, and reuse things you’ve already done without having to rewrite the same code twice.

Machine learning is about more than the trained model. It is about the complete model—a combination of data processing, modeling, and model output filtering. Graph UX simplifies these parts of the modeling workflow and gives users greater insight into how to get more performance out of their models on the edge.
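To make the idea of a "complete model" concrete, here is a minimal Python sketch of the three stages named above: data processing, a model, and output filtering. It is an illustration only and not Imagimob Studio's API or generated code. The names `preprocess`, `toy_model`, `MajorityFilter`, and `run_pipeline` are hypothetical, and the "model" is a stand-in formula rather than a trained network.

```python
from collections import deque
from statistics import mean
from typing import Deque, Iterable, List


def preprocess(window: List[float]) -> List[float]:
    """Data processing: subtract the window mean (simple offset removal)."""
    m = mean(window)
    return [x - m for x in window]


def toy_model(features: List[float]) -> float:
    """Stand-in for a trained model: maps signal energy to a score in [0, 1)."""
    energy = sum(x * x for x in features) / len(features)
    return energy / (1.0 + energy)


class MajorityFilter:
    """Output filtering: report a detection only when most recent scores agree."""

    def __init__(self, size: int = 3, threshold: float = 0.5) -> None:
        self.history: Deque[bool] = deque(maxlen=size)
        self.threshold = threshold

    def update(self, score: float) -> bool:
        self.history.append(score >= self.threshold)
        # Strict majority of the votes currently held in the history buffer.
        return sum(self.history) * 2 > len(self.history)


def run_pipeline(windows: Iterable[List[float]], filt: MajorityFilter) -> List[bool]:
    """Chain the three stages for each incoming data window."""
    return [filt.update(toy_model(preprocess(w))) for w in windows]


if __name__ == "__main__":
    quiet = [1.0, 1.0, 1.0, 1.0]    # constant signal, no activity
    loud = [-2.0, 2.0, -2.0, 2.0]   # strongly varying signal
    print(run_pipeline([quiet, loud, loud, quiet, quiet], MajorityFilter()))
```

In this sketch the filter delays the first detection until two of the last three windows score high, and holds it briefly after the signal goes quiet. That is the kind of trade-off between responsiveness and stability that output filtering introduces on top of the raw model scores.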

Be ready for what is coming. Graph UX supplies a vital foundation for cutting-edge industry developments such as advanced models and hardware with multiple ML accelerators and processing units, like Infineon’s PSOC™ Edge.

This first release of Graph UX covers Data Collection, Evaluation, and Code Generation. In future releases, we plan to cover the entire end-to-end workflow step by step, including Data Management, Data Cleaning, Augmentation, and Training.

Imagimob Studio’s Graph UX update is now live. The update is automatic for existing users.

Haven’t discovered Imagimob Studio yet? Click here to download.

Or get in touch with us.