As the pandemic swept across the world, companies searched for ways to reduce the risk of infection. Gesture control (also known as gesture recognition) and touchless user interfaces have become a hot topic and have changed how people expect to interact with devices.

Both provide the ability to interact with devices without physically touching them. In addition to rising hygiene awareness, another primary driver of the touchless gesture control market today is a demand for lower maintenance costs. Gesture control can be used in a wide range of applications in the automotive, industrial, and gaming sectors.

Here, we’ll explore the different types of gesture control technologies, provide some examples of how they are used in specific cases, and explain why, in our opinion, radar powered by Edge AI stands out above the rest for certain use cases.

Gesture control is a topic in both computer science and language technology, where the primary goal is the interpretation of human gestures via algorithms.

Gesture control devices have the ability to recognize and interpret movements of the human body, allowing users to interact with and control a system without direct physical contact. Gestures can originate from any bodily motion or state, but normally originate from the hand.

There are several different types of touchless technologies used today to enable devices to recognize and respond to gestures and movements. These range from cameras to radar, and they each come with pros and cons depending on the application.

Many gesture control applications use a camera as input. In fact, there are already a number of products available on the market that use smartphone cameras to build mobile apps with gesture control features.

In the automotive sector, BMW has led the way, featuring gesture control in some of their latest models. Their solution allows drivers to control select functions in the infotainment (iDrive) system by using hand gestures which are captured by a 3D camera.

Located in the roof lining, the camera scans an area in front of the dashboard to detect any gestures performed. Various functions can be operated, depending on the equipment.

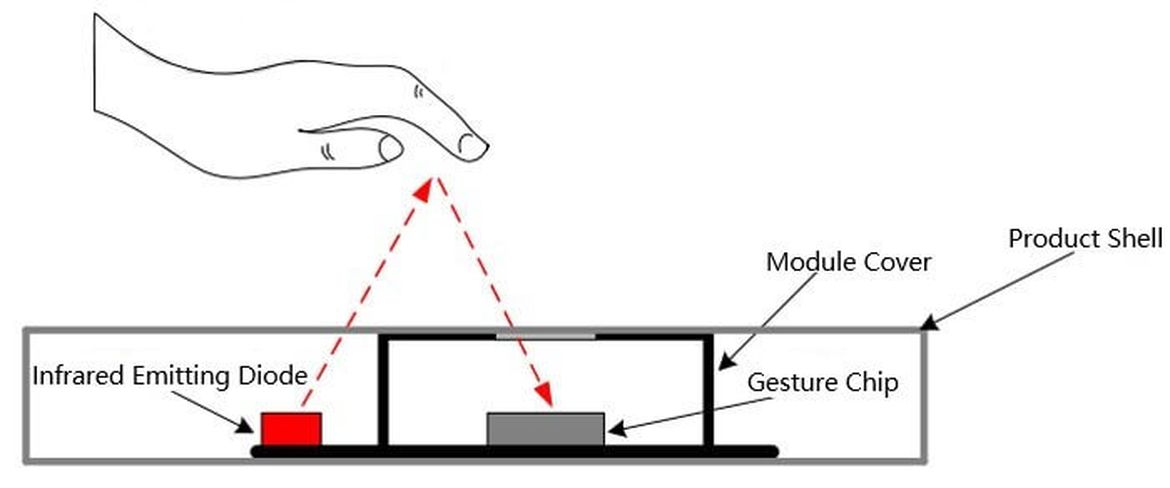

An infrared (IR) sensor is an electronic device that measures and detects infrared radiation in the surrounding environment. Anything that emits heat gives off infrared radiation. IR is invisible to the human eye, since its wavelength is longer than that of visible light.

There are two types of infrared sensors: active and passive. Active infrared sensors both emit and detect infrared radiation, while passive infrared sensors only detect infrared radiation.

Active IR sensors have two parts: a light emitting diode (LED) and a receiver. When an object gets close to the sensor, the infrared light from the LED reflects off of the object and is detected by the receiver. Active IR sensors make excellent proximity sensors, and are commonly used in obstacle detection systems.

Because it can detect a variety of simple gestures at a low cost, infrared sensing technology can be a good match for many industrial, consumer, and automotive applications.
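As a minimal sketch of how an active IR sensor can pick up a simple gesture, assume a hypothetical module with one LED and two receivers, one on each side of it. A hand swiping across reflects light into one receiver before the other, so comparing when each channel first crosses a threshold gives the direction. The function names, the threshold, and the sample data are illustrative assumptions, not a specific product's API.

```python
from typing import Optional, Sequence


def first_crossing(samples: Sequence[float], threshold: float) -> Optional[int]:
    """Return the index of the first sample at or above threshold, or None."""
    for i, value in enumerate(samples):
        if value >= threshold:
            return i
    return None


def swipe_direction(
    left: Sequence[float],
    right: Sequence[float],
    threshold: float = 0.5,
) -> Optional[str]:
    """Classify a swipe from two reflected-intensity traces.

    Returns "left_to_right", "right_to_left", or None when no clear
    swipe is seen (a channel never triggers, or both trigger together).
    """
    t_left = first_crossing(left, threshold)
    t_right = first_crossing(right, threshold)
    if t_left is None or t_right is None or t_left == t_right:
        return None
    return "left_to_right" if t_left < t_right else "right_to_left"


# Made-up normalized intensities: the hand passes the left receiver first.
left_trace = [0.1, 0.2, 0.7, 0.9, 0.4, 0.1, 0.1]
right_trace = [0.1, 0.1, 0.1, 0.3, 0.8, 0.9, 0.2]
print(swipe_direction(left_trace, right_trace))
```

A rule this simple is cheap to run, which is part of why IR works well for basic gestures, but it has no way to describe finer hand movements.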

In our view, radar is the most robust of the touchless gesture control technologies. Radar has some unique properties. One is that it is extremely accurate: even the tiniest motions or gestures can be detected by a radar device.

Another unique property of radar is that it works through materials such as plastic and glass. Furthermore, a radar sensor has no lens that can become dirty, unlike cameras and infrared sensors. This opens up many new product possibilities.

If a camera or infrared sensor becomes dirty, it stops working reliably. In fact, cameras often face many of the same limitations we find with the human eye. They require a clear lens in order to see properly, which limits where you can position them, and they don’t always provide a crisp or reliable picture in bad weather, particularly in heavy rain or snow.

We believe that gesture control using radar is a great solution that can be applied in many use cases. In-ear headphones are one great example, and there are many other application examples in consumer electronics, automotive, and Industry 4.0.

There are a couple of known projects that use radar for gesture control. For instance, Project Soli by Google was announced at the Google I/O developers’ conference back in 2015. Project Soli includes a radar sensor and gesture control software, and was launched commercially in the Google Pixel 4 smartphone in 2019.

Sensors capture the data, but, of course, in order for gesture control to work, that data needs to be decoded. Today, the vast majority of software for processing and interpreting sensor data is based on traditional methods which include transformation, filtering, and statistical analysis.

Such methods are designed by humans who, referencing their personal domain knowledge, are looking for some kind of “fingerprint” in the data.
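To make the traditional approach concrete, here is a minimal sketch of a hand-designed pipeline: a moving-average filter, a discrete Fourier transform, and a few summary statistics combined into a feature set. The function names, the window size, and the synthetic signal are assumptions for illustration; a real pipeline would be tuned to the sensor and the gesture.

```python
import cmath
import math
import statistics
from typing import Dict, List, Sequence


def moving_average(signal: Sequence[float], window: int = 3) -> List[float]:
    """Filtering: smooth the signal with a simple sliding mean."""
    return [
        sum(signal[i:i + window]) / window
        for i in range(len(signal) - window + 1)
    ]


def dominant_frequency_bin(signal: Sequence[float]) -> int:
    """Transformation: return the strongest non-DC bin of a naive DFT."""
    n = len(signal)
    magnitudes = []
    for k in range(1, n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(signal)
        )
        magnitudes.append(abs(coeff))
    return 1 + magnitudes.index(max(magnitudes))


def extract_features(signal: Sequence[float]) -> Dict[str, float]:
    """Statistical analysis: summarize the filtered signal."""
    smooth = moving_average(signal)
    return {
        "mean": statistics.mean(smooth),
        "stdev": statistics.pstdev(smooth),
        "peak_to_peak": max(smooth) - min(smooth),
        "dominant_bin": float(dominant_frequency_bin(signal)),
    }


# Synthetic example: 4 full cycles of a sine wave over 32 samples.
samples = [math.sin(2 * math.pi * 4 * t / 32) for t in range(32)]
features = extract_features(samples)
print(features)
```

A rule written on top of features like these, for example "dominant bin equals 4 and peak-to-peak above some level", is the kind of fingerprint a domain expert would look for.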

Quite often, this fingerprint is a complex combination of events in the data, too complex to capture with hand-written rules, and machine learning is needed to solve the problem.

To be able to process sensor data in real time, the machine learning model needs to run locally on the chip, close to the sensor itself—usually called “the edge.” This concept is called Edge AI or tinyML.
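Running in real time on the edge typically means keeping a short window of recent samples in memory and invoking the model each time the window advances. The sketch below shows that loop with a fixed-size buffer. The `StreamingClassifier` class, the window and stride values, and the stand-in `energy_model` are hypothetical; on a real device the model would be a compiled network and the loop would be firmware rather than Python.

```python
from collections import deque
from typing import Callable, Deque, List, Optional, Sequence


class StreamingClassifier:
    """Feed samples one at a time; run the model every `stride` samples."""

    def __init__(
        self,
        model: Callable[[Sequence[float]], str],
        window: int,
        stride: int,
    ) -> None:
        self.model = model
        self.stride = stride
        # Bounded buffer: the oldest sample is dropped automatically.
        self.buffer: Deque[float] = deque(maxlen=window)
        self.since_last_run = 0

    def push(self, sample: float) -> Optional[str]:
        """Add one sample; return a prediction when one is due, else None."""
        self.buffer.append(sample)
        self.since_last_run += 1
        window_full = len(self.buffer) == self.buffer.maxlen
        if window_full and self.since_last_run >= self.stride:
            self.since_last_run = 0
            return self.model(list(self.buffer))
        return None


def energy_model(window: Sequence[float]) -> str:
    """Stand-in for a trained model: label a window by its mean energy."""
    energy = sum(x * x for x in window) / len(window)
    return "gesture" if energy > 0.25 else "idle"


clf = StreamingClassifier(energy_model, window=4, stride=2)
stream = [0.0, 0.0, 0.0, 0.0, 0.9, 0.9, 0.9, 0.9]
predictions: List[str] = [p for p in map(clf.push, stream) if p is not None]
print(predictions)
```

Memory use is bounded by the window length and nothing leaves the device, which is what makes this pattern a fit for small, battery-powered hardware.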

In the pursuit of creating radically new and creative embedded gesture control applications, we found a great match in Acconeer, a leading innovator in radar sensors.

Imagimob and Acconeer share the mission of supplying solutions for small battery-powered devices with extreme requirements on energy efficiency, processing capacity, and cost.

Acconeer produces the world’s smallest and most energy efficient radar sensor, the A1. The data from the sensor contains a lot of information and, for advanced use cases such as gesture control, complex interpretation is needed. That is a perfect task for Imagimob’s ultra-efficient Edge AI software. The most advanced gestures definitely require Edge AI.

With its small size, the sensor could be placed inside a pair of headphones, and the gestures could act as virtual buttons that control functions usually assigned to physical buttons.

In 2020, we decided to create something that was not yet seen in the market. We decided to build a fully functional prototype of gesture-controlled in-ear headphones together with Acconeer and OSM Group (an ODM).

Gesture control is a perfect fit for in-ear headphones, since the earbud is small and out of the user's sight when worn, which makes physical buttons difficult to use.

This project extended our original concept to include selecting an MCU and developing firmware, while keeping everything small enough to fit into the form factor of in-ear headphones.

The result? The end product, and the step forward in touchless technology that it represents, is now available as fully working prototypes that customers can test while running Spotify on a mobile phone. The prototypes are convenient to use in everyday life.

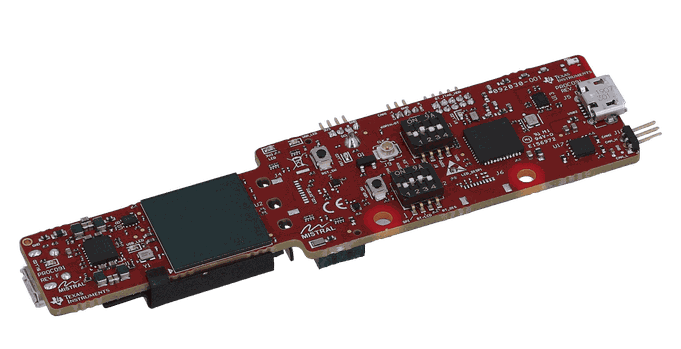

Different radars have different properties. Whereas we successfully demonstrated fully functional true wireless in-ear earbuds with the Acconeer radar, we pursued more industrial and automotive applications using the Texas Instruments (TI) radar. There we took on a new challenge: comparing and building models for opposing gesture pairs.

In Q2 of 2021, we began working on the TI radar and on completing its integration with Imagimob AI. We created a data pipeline solution that streamlines and simplifies the process of data collection.

Using this, we built a simple model that identifies one of four classes (two gesture pairs): clockwise and counterclockwise finger rotation, and left and right hand swipes.
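A four-class model of this kind usually ends in a vector of scores, one per gesture, and the application picks the most likely class. As a rough sketch of that last step (the label names, the score values, and the confidence threshold are assumptions, not the output format of the actual starter project):

```python
import math
from typing import List, Optional, Sequence

# Two opposing gesture pairs, as hypothetical label strings.
LABELS = [
    "rotate_clockwise",
    "rotate_counterclockwise",
    "swipe_left",
    "swipe_right",
]


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decode(scores: Sequence[float], min_confidence: float = 0.6) -> Optional[str]:
    """Return the winning label, or None when the model is unsure."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best] if probs[best] >= min_confidence else None


print(decode([0.2, 3.1, 0.1, 0.4]))  # one score clearly dominates
print(decode([1.0, 1.1, 0.9, 1.0]))  # scores nearly equal, so rejected
```

Rejecting low-confidence windows matters for opposing gestures in particular, because a wrong guess would trigger the opposite action rather than no action.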

The end result is a starter project that allows new users to get up and running, building and testing models in minutes. The starter project features a lightweight model that can run efficiently on the TI radar firmware and differentiate between opposing gestures that would typically be difficult to tell apart.

Download the starter project and you get Python tools for quick data collection, as well as pre-existing data and models, so that you can experiment with building and testing models right away. Finally, you get a testing tool that lets you test the Python models in real time on your device.

Imagimob has integrated the Acconeer radar and the TI radar in Imagimob AI, which is a development platform for machine learning on edge devices. We have developed content packs for both radars that make it possible to get started with gesture control applications in minutes. Learn more about Imagimob AI and sign up for a free account here.